BLOG: Smart programming for robots

March 11, 2015


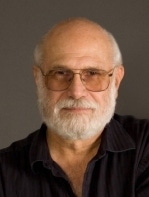

C.G. Masi

It doesn't sound like it at first, but the title of this post is a mind-numbingly stupid (yes, another pun) pun. I'll explain it later.

Around 2010, I wrote a novel entitled "Vengeance is Mine!" Toward the end, a Mexican drug cartel stole a mobile robot that an American security company was using in support of the Mexican government's war on drugs. The cartel's intent was to steal the technology and turn it back against the government's efforts. They thought they could do it because the robot had been set up to respond to verbal commands. This verbal programming technology is a major plot driver in the novel.

Things seemed to be going along fine until the thieves managed to get the robot powered up, only to find that it wanted to talk to them! Specifically, it kept asking about the human operators it had worked with before: where they were and why they weren't available to talk to it. The conversation degenerated into a long, involved back-and-forth session as the thief tried to convince the robot that he worked for the security company, while the robot politely and good-naturedly rebuffed all the thief's efforts to be friendly. The robot obviously didn't believe a word the thief was saying.

What the thief didn't know was that the robot knew perfectly well that it had been stolen, had reported the theft to the security-company dispatcher and had been instructed to stall the thieves until help (in the form of a security-company SEAL team) could arrive to rescue it. The estimated response time was seven minutes.

At the time I wrote that, and maybe even today, it sounded like wildly futuristic science fiction. But it wasn't.

What sounded impossible, that a robot could have the capability to want something, was in fact well within the realm of artificial intelligence technology even then. Back in 2002, researchers William Smart (Washington University) and Leslie Kaelbling (Massachusetts Institute of Technology), working under a DARPA grant, published a little paper entitled "Effective Reinforcement Learning for Mobile Robots," in which they set out principles for programming robots for goal-directed self-learning.

You see that this blog's title is a stupid pun on the first researcher's name. Ha! Ha!

The crux of the reinforcement-learning technique is to provide the robot's software with a perception that one outcome of an action is better than another. The system—in this case a mobile robot—then prefers to repeat actions that experience has shown to have a more positive outcome in a given situation.
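To make that idea concrete, here is a minimal sketch in Python. It is not the method from the Smart and Kaelbling paper. It's a toy version of tabular Q-learning, a standard textbook reinforcement-learning technique, and everything in it is my own assumption for illustration: the five-cell corridor, the reward of 1.0 at the far end, the function names, and the parameter values. The "robot" starts at cell 0, gets a reward only when it reaches cell 4, and keeps a table of how good each action has turned out to be in each cell.

```python
import random

ACTIONS = ("left", "right")
GOAL = 4  # hypothetical corridor: cells 0..4, reward only on reaching cell 4


def step(state, action):
    """Move one cell (cell 0 is a wall on the left); return (next_state, reward)."""
    nxt = max(0, state - 1) if action == "left" else state + 1
    return nxt, (1.0 if nxt == GOAL else 0.0)


def choose(q, state, rng, epsilon):
    """Epsilon-greedy: usually repeat the best-known action, sometimes explore."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    best = max(q[(state, a)] for a in ACTIONS)
    # Break ties at random so an untrained robot wanders instead of sticking to one action.
    return rng.choice([a for a in ACTIONS if q[(state, a)] == best])


def train(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn action values from experience; all parameter values are illustrative."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(GOAL) for a in ACTIONS}
    for _ in range(episodes):
        state = 0
        while state != GOAL:
            action = choose(q, state, rng, epsilon)
            nxt, reward = step(state, action)
            # Value of the best action available from the next cell (nothing after the goal).
            future = 0.0 if nxt == GOAL else max(q[(nxt, a)] for a in ACTIONS)
            # Nudge the stored value toward "reward now plus discounted value later".
            q[(state, action)] += alpha * (reward + gamma * future - q[(state, action)])
            state = nxt
    return q


def preferred_actions(q):
    """For each cell, the action that experience has shown to work out best."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)}


if __name__ == "__main__":
    print(preferred_actions(train()))
```

Nobody tells the robot to go right. It just ends up preferring "right" in every cell because, in its experience, that's the action that led to the better outcome. A real mobile robot has continuous sensor readings rather than five tidy cells, so a lookup table like this one wouldn't be enough on its own, but the preference-from-experience loop is the same basic idea.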

This technology has gotten attention recently in the business-to-business press, in articles about human/robot cross training. That is, having humans and robots work together on training exercises where they swap tasks so that they build congruent mental images, enabling them to work better together in the real world. Tests of the technique have shown statistically significant, in fact very significant, improvements in human/robot team effectiveness.
